OpenAI has launched a tool for detecting text generated using services like its own ChatGPT.

Generative AI models used for services like ChatGPT have raised many societal and ethical questions: Could they be used to generate misinformation on an unprecedented scale? What if students cheat using them? Should an AI be credited where excerpts are used for articles or papers?

A paper (PDF) from the Middlebury Institute of International Studies’ Center on Terrorism, Extremism, and Counterterrorism even found that GPT-3 is able to generate “influential” text that has the potential to radicalise people into far-right extremist ideologies.

OpenAI was founded to promote and develop “friendly AI” that benefits humanity. As the creator of powerful generative AI models like GPT and DALL-E, OpenAI has a responsibility to ensure its creations have safeguards in place to prevent – or at least minimise – their use for harmful purposes.

The new tool launched this week claims to be able to distinguish text written by a human from that of an AI (not just GPT). However, OpenAI warns that it’s currently unreliable.

On a challenge set of English texts, OpenAI says its tool correctly identifies only 26 percent of AI-written text as “likely AI-written”. The tool falsely classifies human-written text as AI-written around nine percent of the time.
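To put those two rates in context, here is a rough sketch of how they combine. It uses Bayes' rule to estimate what share of flagged texts would actually be AI-written. The 26 percent and nine percent figures are the ones OpenAI reports for its challenge set; the proportions of AI-written text in the pool (`ai_share`) are purely hypothetical, and the function name is illustrative rather than part of any OpenAI tooling.

```python
def share_of_flags_that_are_ai(tpr: float, fpr: float, ai_share: float) -> float:
    """Bayes' rule: probability a text is AI-written given that it was flagged.

    tpr      -- fraction of AI-written text that gets flagged (true positive rate)
    fpr      -- fraction of human-written text that gets flagged (false positive rate)
    ai_share -- assumed fraction of all texts that are AI-written (hypothetical)
    """
    flagged_ai = tpr * ai_share
    flagged_human = fpr * (1.0 - ai_share)
    return flagged_ai / (flagged_ai + flagged_human)


TPR = 0.26  # reported by OpenAI on its challenge set
FPR = 0.09  # reported by OpenAI on its challenge set

# Hypothetical mixes of AI-written text in the pool being checked.
for ai_share in (0.05, 0.25, 0.50):
    precision = share_of_flags_that_are_ai(TPR, FPR, ai_share)
    print(f"AI share {ai_share:.0%}: {precision:.0%} of flagged texts are AI-written")
```

Under these assumptions, if only five percent of submitted texts were AI-written, roughly 13 percent of the flagged ones would be. Even with an even split, about a quarter of flags would land on human-written text. Real-world rates may differ from the challenge-set figures.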

“While it is impossible to reliably detect all AI-written text, we believe good classifiers can inform mitigations for false claims that AI-generated text was written by a human: for example, running automated misinformation campaigns, using AI tools for academic dishonesty, and positioning an AI chatbot as a human,” says OpenAI.

“[The tool] should not be used as a primary decision-making tool, but instead as a complement to other methods of determining the source of a piece of text.”

Other key limitations of the tool are that it’s “very unreliable” on short texts under 1,000 characters and it’s “significantly worse” when applied to non-English texts.

OpenAI originally provided access to GPT to a small number of trusted researchers and developers. It then introduced a waitlist while it developed more robust safeguards. The waitlist was removed in November 2021, but work on improving safety is ongoing.

“To ensure API-backed applications are built responsibly, we provide tools and help developers use best practices so they can bring their applications to production quickly and safely,” wrote OpenAI in a blog post.

“As our systems evolve and we work to improve the capabilities of our safeguards, we expect to continue streamlining the process for developers, refining our usage guidelines, and allowing even more use cases over time.”

OpenAI CEO Sam Altman recently shared his view that there is too much hype around GPT’s next major version, GPT-4, and that people are “begging to be disappointed”. He also believes that a video-generating model will come, but won’t put a timeframe on when.

Videos that have been manipulated with AI technology, also known as deepfakes, are already proving to be problematic. People are easily convinced by what they think they can see.

We’ve seen deepfakes of disgraced FTX founder Sam Bankman-Fried used to commit fraud, of Ukrainian President Volodymyr Zelenskyy used to spread disinformation, and of US House Speaker Nancy Pelosi used to defame her by making her appear drunk.

OpenAI is doing the right thing by taking its time to minimise risks, keeping expectations in check, and building tools for uncovering content that falsely claims to have been created by a human.

OpenAI’s work-in-progress tool for detecting AI-generated text is available to test. An alternative called ‘DetectGPT’ is being created by Stanford University researchers and is available to demo.

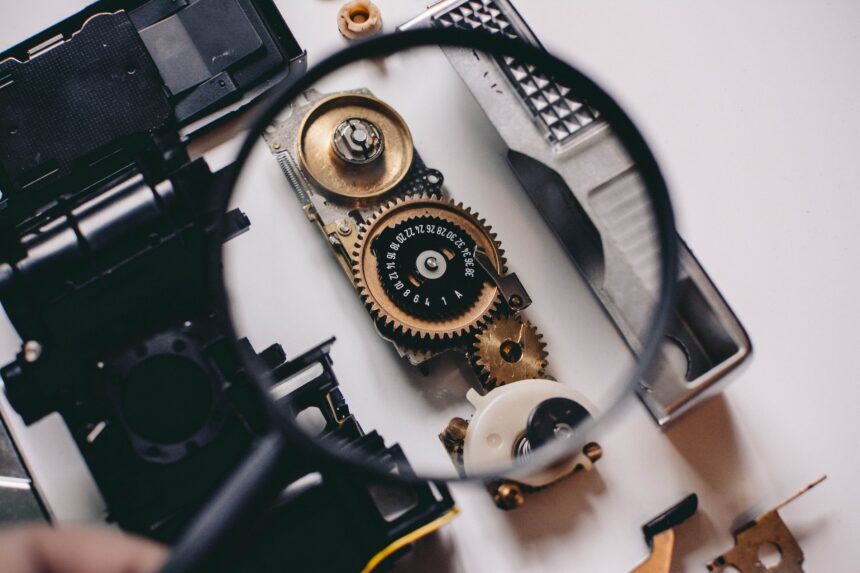

(Photo by Shane Aldendorff on Unsplash)
