Introduction

Text emotion classification means assigning an emotion, such as joy or sadness, to a piece of text. It can be approached with neural networks or with lexicon-based methods. A neural network is trained on labeled text to predict the emotions expressed in new text, while a lexicon-based method looks words up in a dictionary that associates words with emotions. Though challenging, text emotion classification has numerous potential applications.
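To make the contrast concrete, here is a minimal sketch of the lexicon-based approach. The tiny `EMOTION_LEXICON` dictionary and the `classify_with_lexicon` helper are hypothetical, made up for illustration; the rest of this article trains a neural network instead.

```python
import re
from collections import Counter

# Hypothetical toy lexicon for illustration only; real emotion lexicons
# contain thousands of word-emotion entries.
EMOTION_LEXICON = {
    "happy": "joy",
    "glad": "joy",
    "died": "sadness",
    "cry": "sadness",
    "angry": "anger",
    "furious": "anger",
    "scared": "fear",
}


def classify_with_lexicon(text, lexicon=EMOTION_LEXICON, default="neutral"):
    """Return the emotion whose lexicon words appear most often in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(lexicon[w] for w in words if w in lexicon)
    if not counts:
        return default  # no lexicon word found in the text
    return counts.most_common(1)[0][0]


print(classify_with_lexicon("She cried because her mother died"))
```

A lexicon-based classifier needs no training data, but it ignores every word outside its dictionary (here “cried” is skipped because only “cry” is listed) and cannot use context, which is one motivation for learning the associations from labeled data with a neural network.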

The main objectives of text emotion classification are:

- To understand the emotional state of the author. This can be helpful in a variety of contexts, such as customer service, healthcare, and education.

- To improve the accuracy of machine translation systems. Machine translation systems can often struggle to correctly translate text that is emotionally charged.

- To develop new applications for social media and other online platforms. For example, text emotion classification can be used to recommend content to users based on their emotional state.

With these goals in mind, we will build a neural network model that classifies the emotion expressed in a sentence. This article walks you step by step through classifying user-entered text into specific emotions.

Step 1: Import Libraries

```python
# Uses the Keras 2.x API (TensorFlow 2.x before 2.16); Tokenizer is not part of Keras 3
import pandas as pd
import numpy as np
import keras
import tensorflow
from keras.preprocessing.text import Tokenizer
from tensorflow.keras.preprocessing.sequence import pad_sequences
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import train_test_split
from keras.models import Sequential
from keras.layers import Embedding, Flatten, Dense
```

We import ‘Tokenizer’ to convert text into sequences of integer tokens, and ‘pad_sequences’ to pad those sequences to a fixed length, which is necessary because the neural network expects inputs of a fixed size. ‘LabelEncoder’ converts the categorical emotion labels into integers, and ‘train_test_split’ splits the data into training and test sets. ‘Sequential’ creates a linear stack of layers. ‘Embedding’ maps each word index to a dense vector that is learned during training, so words used in similar ways tend to end up with similar vectors. ‘Flatten’ reshapes each example's multidimensional output into a 1D vector. Finally, ‘Dense’ is a fully connected layer: it computes a weighted sum of its inputs plus a bias and, optionally, applies a non-linear activation function such as ReLU or softmax.
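To see what these layers do to the data, here is a NumPy sketch of a forward pass through Embedding → Flatten → Dense (ReLU) → Dense (softmax). It is not Keras's implementation: the sizes are hypothetical and far smaller than in the real model, and the weights are random rather than learned.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, much smaller than in the real model
vocab_size, embed_dim, hidden_units, num_classes = 10, 4, 8, 6

# Embedding: a (vocab_size, embed_dim) weight matrix; the lookup is row indexing
embedding_matrix = rng.normal(size=(vocab_size, embed_dim))
batch = np.array([[0, 0, 3, 7, 2],   # a pre-padded sequence of length 5 (0 = padding)
                  [1, 4, 4, 9, 5]])
embedded = embedding_matrix[batch]             # shape (2, 5, 4)

# Flatten: join each sentence's word vectors into one vector
flat = embedded.reshape(len(batch), -1)        # shape (2, 20)

# Dense with ReLU: weighted sum plus bias, then max(0, x)
W1 = rng.normal(size=(flat.shape[1], hidden_units))
b1 = np.zeros(hidden_units)
hidden = np.maximum(0, flat @ W1 + b1)         # shape (2, 8)

# Dense with softmax: one probability per emotion class
W2 = rng.normal(size=(hidden_units, num_classes))
b2 = np.zeros(num_classes)
logits = hidden @ W2 + b2                      # shape (2, 6)
exp = np.exp(logits - logits.max(axis=1, keepdims=True))  # subtract max for stability
probs = exp / exp.sum(axis=1, keepdims=True)

print(embedded.shape, flat.shape, hidden.shape, probs.shape)
```

In the real model, Keras learns the embedding matrix and both Dense layers' weights and biases during training; the shapes follow the same pattern, with one row per vocabulary word and one vector slot per padded word position.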

Step 2: Read the Data

We use the emotion dataset that Praveen uploaded to Kaggle, which is well suited to classifying emotions in text. I have also put a copy on my GitHub so that it is easy to load.

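```python
url = "https://raw.githubusercontent.com/ataislucky/Data-Science/main/dataset/emotion_train.txt"

# Each line is "sentence;emotion" with no header row, so header=None keeps the first line as data
data = pd.read_csv(url, sep=";", header=None, names=["Text", "Emotions"])

print(data.head())
```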

This code reads the semicolon-separated text file from the URL into a pandas DataFrame. Each line of the file contains a sentence followed by its emotion label, so the dataset has only two columns: Text and Emotions.
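Assuming each line of the file is just `sentence;emotion` with no header row, `read_csv`'s default `header=0` would consume the first sentence as column names and silently drop one training example. The two-line sample below is made up, not taken from the dataset, and shows the difference:

```python
import io

import pandas as pd

# Made-up sample in the same "sentence;emotion" format, with no header row
sample = "i feel great today;joy\ni miss my old friends;sadness\n"

# Default header=0: the first line is used as column names
without_header_arg = pd.read_csv(io.StringIO(sample), sep=";")

# header=None plus explicit names: every line is kept as data
with_header_none = pd.read_csv(io.StringIO(sample), sep=";", header=None,
                               names=["Text", "Emotions"])

print(len(without_header_arg), len(with_header_none))
```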

Step 3: Data Preprocessing

Data pre-processing is an important step in text emotion classification: it cleans the data and prepares it for use by machine learning models. Common pre-processing steps for this task include tokenization, stop-word removal, and lemmatization. The main challenges at this stage are usually cleaning the data, selecting which data to use, and getting it into the right format.
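As an illustration of two of these steps, the sketch below lowercases text, strips punctuation, and removes stop words. The `STOP_WORDS` set and the `clean_text` helper are hypothetical; the pipeline in this article does not call them and relies on Keras's `Tokenizer`, which by default lowercases text and filters punctuation but does not remove stop words.

```python
import re

# Hypothetical, deliberately small stop-word list for illustration only
STOP_WORDS = {"a", "an", "the", "is", "am", "are", "i", "to", "of", "and", "my"}


def clean_text(text):
    """Lowercase, keep only letters and apostrophes, and drop stop words."""
    words = re.findall(r"[a-z']+", text.lower())
    return " ".join(w for w in words if w not in STOP_WORDS)


print(clean_text("I am SO tired of the rain!"))
```

Be careful with stop-word lists in emotion tasks: words such as “not” or “no” appear on many standard lists but can reverse the emotion of a sentence.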

A tokenizer breaks a text string into individual words, or tokens. Keras's ‘Tokenizer’ works on a collection of texts, so we first convert the Text and Emotions columns of the DataFrame into Python lists, with one entry per sentence.
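Conceptually, fitting the tokenizer and padding the sequences do something like the plain-Python sketch below. The `words_in`, `build_word_index`, and `texts_to_padded` helpers are hypothetical simplifications, not Keras's code. Like Keras, they rank words by frequency starting at index 1, drop unseen words (Keras does this when no `oov_token` is set), and pad and truncate at the front (`pad_sequences` defaults to `"pre"`); Keras's exact splitting and filtering rules differ slightly.

```python
import re
from collections import Counter


def words_in(text):
    """Lowercase and split into words (a simplification of Keras's filtering)."""
    return re.findall(r"[a-z']+", text.lower())


def build_word_index(texts):
    """Map each word to an integer id, most frequent first, starting at 1 (0 = padding)."""
    counts = Counter()
    for text in texts:
        counts.update(words_in(text))
    # sorted() is stable, so words with equal counts keep their first-seen order
    ranked = sorted(counts, key=counts.get, reverse=True)
    return {word: i + 1 for i, word in enumerate(ranked)}


def texts_to_padded(texts, word_index, max_length):
    """Convert texts to id sequences (unknown words dropped), then pre-pad with zeros."""
    padded = []
    for text in texts:
        seq = [word_index[w] for w in words_in(text) if w in word_index]
        seq = seq[-max_length:]  # keep the last max_length ids, like truncating="pre"
        padded.append([0] * (max_length - len(seq)) + seq)
    return padded


word_index = build_word_index(["i feel happy", "i feel sad today"])
print(word_index)
print(texts_to_padded(["i feel happy", "today i feel great"], word_index, 4))
```

In the second sentence, “great” is silently dropped because it never appeared when the index was built; likewise, a sentence with more than `max_length` known words would lose its first words. Both behaviours matter when the model later sees user input.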

```python
texts = data["Text"].tolist()
labels = data["Emotions"].tolist()
```

Next, we fit the tokenizer on the list of texts. Fitting builds the vocabulary: every unique word receives an integer index, with more frequent words getting smaller indices. The fitted tokenizer can then convert text into sequences of integers that a neural network can consume.

```python
# Tokenize the text data
tokenizer = Tokenizer()
tokenizer.fit_on_texts(texts)
```

Now we convert each text into a sequence of word indices and pad the sequences to the same length so they can be fed into the neural network. Here’s how:

```python
# Convert texts to integer sequences and pad them to the length of the longest one
sequences = tokenizer.texts_to_sequences(texts)
max_length = max(len(seq) for seq in sequences)
padded_sequences = pad_sequences(sequences, maxlen=max_length)
```

Next, we use ‘LabelEncoder’ to convert the emotion labels from strings to integers.

```python
# Encode the string labels to integers
label_encoder = LabelEncoder()
labels = label_encoder.fit_transform(labels)
```

We then one-hot encode the integer labels, turning each label into a vector with a 1 at the position of its class and 0 everywhere else. This is the label format expected when training a softmax output layer with categorical cross-entropy loss. Let’s do it.

```python
# One-hot encode the labels
one_hot_labels = keras.utils.to_categorical(labels)
```

Step 4: Build Model and Make Predictions

With pre-processing done, we can start building the model.

First, we split the dataset into a training set and a test set, which makes it easy to assess the model's performance on data it has never seen. This matters because it lets us check whether the model is overfitting the training data.

```python
# Split the data into training and testing sets
xtrain, xtest, ytrain, ytest = train_test_split(padded_sequences,
                                                one_hot_labels,
                                                test_size=0.2)
```

Let’s now define the neural network architecture to train the model and classify emotions.

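```python
# Define the model
model = Sequential()
# Map each word index to a 128-dimensional vector (index 0 is reserved for padding)
model.add(Embedding(input_dim=len(tokenizer.word_index) + 1,
                    output_dim=128, input_length=max_length))
# Concatenate the word vectors of each sentence into one long vector
model.add(Flatten())
model.add(Dense(units=128, activation="relu"))
# One output per emotion; softmax turns the outputs into probabilities
model.add(Dense(units=len(one_hot_labels[0]), activation="softmax"))

# Compile and train the model
model.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])
model.fit(xtrain, ytrain, epochs=10, batch_size=32, validation_data=(xtest, ytest))
```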

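The model is trained for ten epochs. In each epoch, the model is trained on the training data and then evaluated on the validation data, which here is the test set. Because the model never trains on the validation data, its validation accuracy shows how well it generalizes to unseen text. Comparing training and validation accuracy helps detect overfitting: if training accuracy keeps rising while validation accuracy stalls or drops, the model is memorizing the training data rather than learning general patterns.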
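The loss and metric passed to `model.compile` can be reproduced by hand. The NumPy sketch below uses made-up labels and softmax outputs to show how one-hot labels, categorical cross-entropy, and accuracy relate; during training, Keras computes these per batch and reports their averages over the epoch so far.

```python
import numpy as np

# Made-up example: 2 sentences, 3 emotion classes
labels = np.array([2, 0])              # integer labels, as produced by LabelEncoder
one_hot = np.eye(3)[labels]            # same values as to_categorical(labels, 3)
probs = np.array([[0.1, 0.2, 0.7],     # softmax outputs for each sentence
                  [0.6, 0.3, 0.1]])

# Categorical cross-entropy: -sum(y * log(p)) per sentence, averaged over the batch
loss = -np.mean(np.sum(one_hot * np.log(probs), axis=1))

# Accuracy: fraction of sentences whose most probable class is the true class
accuracy = np.mean(np.argmax(probs, axis=1) == labels)

print(loss, accuracy)
```

Because the one-hot vector is zero everywhere except the true class, only the probability assigned to the correct emotion enters the loss, so the loss falls as the model becomes more confident in the right answer.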

After the model is trained, it is ready to be used to make predictions on new data.

```python
# Read a sentence from the user
input_text = input("Please input sentence here : ")

# Preprocess the input text the same way as the training data
input_sequence = tokenizer.texts_to_sequences([input_text])
padded_input_sequence = pad_sequences(input_sequence, maxlen=max_length)

# Predict the emotion probabilities and map the most likely class back to its name
prediction = model.predict(padded_input_sequence)
predicted_label = label_encoder.inverse_transform([np.argmax(prediction[0])])
print(predicted_label)
```

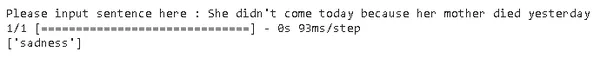

In the output above, the user enters the sentence “She didn’t come today because her mother died yesterday”, and the model predicts that the emotion of the sentence is sadness.

The model was trained on a dataset of sentences labeled with their emotions, so it learns to associate certain words and phrases with certain emotions. The word “died” is frequently associated with sadness, so when it appears in a sentence, the model is likely to predict sadness.
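The last two lines of the prediction code turn the softmax output back into an emotion name. The NumPy sketch below mimics that round trip with made-up labels and probabilities: `LabelEncoder` numbers the classes in sorted order of their names, `np.argmax` picks the most probable index, and `inverse_transform` maps that index back to its name. The arrays here are hypothetical stand-ins for the article's `label_encoder` and `prediction`.

```python
import numpy as np

# Hypothetical string labels
labels = ["sadness", "joy", "anger", "joy"]

# LabelEncoder's classes_ are the sorted unique labels
classes = np.unique(labels)                    # ['anger', 'joy', 'sadness']
encoded = np.searchsorted(classes, labels)     # like LabelEncoder().fit_transform(labels)

# Made-up softmax output for one sentence: one probability per class
prediction = np.array([[0.05, 0.15, 0.80]])
predicted_label = classes[np.argmax(prediction[0])]  # like inverse_transform([argmax])

print(encoded, predicted_label)
```

Note that softmax always distributes probability over the trained emotions, so the model returns one of them even for a neutral sentence; inspecting `prediction[0]` shows how confident that choice is.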

Conclusion

This article started with pre-processing, which included converting the DataFrame columns to lists, tokenizing and padding the text, and encoding the labels. In this post, we have discussed the following:

- Neural networks can be used to classify the emotions expressed in text data.

- Feature engineering plays an important role in the classification of text emotions because extracting relevant features from text can improve model performance.

- Training a neural network model on labeled text data lets it learn patterns and associations between text and the corresponding emotions.

- Neural networks offer the advantage of capturing complex patterns and relationships in text data, thereby enabling accurate classification of emotions.

Overall, this article provides a step-by-step guide to classifying text emotions with a neural network in Python. Feel free to ask questions in the comments section below. The full code is here.

By Analytics Vidhya, May 27, 2023.